What Is Technology?

One of the main themes of W. Brian Arthur’s book The Nature of Technology: What It Is and How It Evolves is that technology evolves.

As I reflected on the book, I found myself thinking about the opening sequence from Stanley Kubrick’s film 2001: A Space Odyssey. The film opens with a sequence called “Dawn of Man.” A group of apes learns how to use animal bones as tools and weapons. One ape throws the bone into the air. The camera follows the bone through the air and then, suddenly, we’re millions of years in the future seeing a spaceship with a similar shape.

The underlying message of the film sequence and cut is similar to the book’s core message: Humans have evolved along with our technology — and both will continue to evolve.

***

Context

It’s worth understanding a bit about the author and the research institute with which he’s chiefly affiliated, the Santa Fe Institute.

W. Brian Arthur is an economist. He was Morrison Professor of Economics and Population Studies at Stanford University from 1983 to 1996, and one of his key contributions was the concept of increasing returns. He was also one of the early pioneers of complexity theory, particularly as it applied to economics. In contrast to conventional neoclassical economics, which assumes rational agents, equilibrium, and elegant, balanced equations, complexity economics assumes a world in which agents differ from each other, have imperfect information, and are constantly changing and reacting. The world it models is more vibrant, changing, and organic, displaying emergent phenomena.

Technology plays a key role in this picture, hence the book’s goal: to understand rigorously what technology is and how it changes (evolves). After laying the intellectual foundation of technology, W. Brian Arthur makes the link between technology and economics explicit.

***

The core building blocks of the argument

“…technology—the collection of artifacts and methods available to a society—creates new elements from those that already exist and thereby builds out.”

W. Brian Arthur starts by providing three definitions of technology—a singular, a plural, and a general:

A technology is a means to fulfill a human purpose.

Technology is an assemblage of practices and components.

Technology is the entire collection of devices and engineering practices available to a culture.

He offers three foundational principles for thinking about technology:

All technologies are combinations.

Each component of technology is itself in miniature a technology.

All technologies harness and exploit some effect or phenomenon, usually several.

He explains these principles as follows:

All technologies are combinations:

Technology consists of parts organized around a central concept or principle: “the method of the thing…”

The primary structure of a technology consists of a main assembly that carries out its base function plus a set of subassemblies that support this.

The assemblies, subassemblies, and individual parts are all executables; they are all technologies, which brings us to the second principle…

Each component of technology is itself in miniature a technology:

Technologies are built from a hierarchy of technologies

e.g.,

The F-35 fighter aircraft’s main purposes are to provide close air support, intercept enemy aircraft, suppress enemy radar defenses, and take out ground targets. It achieves them by combining sub-technologies that include the wings, the engine, the avionics suite (the aircraft’s electronic systems), the landing gear, the flight control systems, the hydraulic system, and so forth.

And you can go further down the hierarchy, breaking down the engine: air inlet system, compressor system, combustion system, turbine system, nozzle system.

And further still, breaking down the air inlet system (which Arthur does), and so on.

He also extends the technology hierarchy upward, placing the F-35 into a larger system, the carrier air wing, and then all the way up into a grand technology: a theater-of-war grouping, which consists of a carrier group supported by land-based air units, airborne refueling tankers, National Reconnaissance Office satellites, ground surveillance units, and marine aviation units.
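The recursive structure described above maps naturally onto a tree in which every node, at every level, is the same kind of object. The sketch below is a hypothetical illustration, not a model from the book. The `Technology` class, the `tech` helper, and the `walk` method are invented for this example. The component lists include only the parts named above.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Tuple


@dataclass
class Technology:
    """A means to a purpose; each component is itself a Technology (hypothetical model)."""
    name: str
    components: List["Technology"] = field(default_factory=list)

    def walk(self, depth: int = 0) -> Iterator[Tuple[int, "Technology"]]:
        """Yield (depth, technology) for this node and every node beneath it."""
        yield depth, self
        for part in self.components:
            yield from part.walk(depth + 1)


def tech(name: str, *parts: Technology) -> Technology:
    """Hypothetical convenience constructor."""
    return Technology(name, list(parts))


# Only the parts named in the text; a real breakdown would go much deeper.
engine = tech(
    "engine",
    tech("air inlet system"),
    tech("compressor system"),
    tech("combustion system"),
    tech("turbine system"),
    tech("nozzle system"),
)
f35 = tech(
    "F-35",
    tech("wings"),
    engine,
    tech("avionics suite"),
    tech("landing gear"),
    tech("flight control systems"),
    tech("hydraulic system"),
)
# Extending the hierarchy upward: the aircraft is one component of a larger system.
carrier_air_wing = tech("carrier air wing", f35)

if __name__ == "__main__":
    # Print the hierarchy as an indented outline.
    for depth, t in carrier_air_wing.walk():
        print("  " * depth + t.name)
```

The design choice mirrors Arthur's second principle: no separate "part" type exists, so the same traversal works whether you start at the engine, the aircraft, or the carrier air wing.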

All technologies harness and exploit some effect or phenomenon, usually several:

e.g., oil refining exploits vaporization and condensation; a hammer exploits momentum; radiocarbon dating exploits carbon-14 decay; trucks exploit the energy stored in fossil fuels, as well as friction

Phenomena have to be harnessed, coaxed, and tuned to work effectively; hence, the need for supporting technologies (the subassemblies) that bring them together in a usable way

This allows W. Brian Arthur to provide an incredibly powerful way to characterize technology: A technology is a programming of phenomena to our purposes.

Another way to put it: phenomena are the “genes” of technology.

Here, W. Brian Arthur makes a fascinating detour to address an important implication of his principles:

I defined a technology as a means to a purpose. But there are many means to purposes and some of them do not feel like technologies at all. Business organizations, legal systems, monetary systems, and contracts are all means to purposes and so my definition makes them technologies. Moreover, their subparts—such as subdivisions and departments of organizations, say—are also means to purposes, so they seem to share the properties of technology. But they lack in some sense the “technology-ness” of technologies. So, what do we do about them? If we disallow them as technologies, then my definition of technologies as means to purposes is not valid. But if we accept them, where do we draw the line? Is a Mahler symphony a technology? It also is a means to fulfill a purpose—providing a sensual experience, say—and it too has component parts that in turn fulfill purposes. Is Mahler then an engineer? Is his second symphony—if you can pardon the pun—an orchestration of phenomena to a purpose?

He proposes that, yes, “…all means—monetary systems, contracts, symphonies, and legal codes, as well as physical methods and devices—are technologies.”

He dives into a discussion of money and the monetary system as technologies, one that is very relevant to Bitcoin, though he doesn’t mention Bitcoin.

The reason, he suggests, that some technologies feel more technology-like than others is the following:

Conventional technologies, such as radar and electricity generation, feel like “technologies” because they are based upon physical phenomena. Nonconventional ones, such as contracts and legal systems, do not feel like technologies because they are based upon nonphysical “effects”—organizational or behavioral effects, or even logical or mathematical ones in the case of algorithms.

These are the core building blocks of his argument. The rest are implications and observations.

***

Deepening the argument

From there, W. Brian Arthur makes other fascinating observations:

Science and technology share a symbiotic relationship. Science uncovers phenomena, technology exploits them. But then technology helps science advance, uncovering more usable phenomena. And so on.

Standard engineering is a key mechanism by which technologies come into being and evolve.

The principles above let us view a technology not from the outside, as a stand-alone object, but from the inside:

…when we look from the inside, we see that a technology’s interior components are changing all the time, as better parts are substituted, materials improve, methods for construction change, the phenomena the technology is based on are better understood, and new elements become available as its parent domain develops. So a technology is not a fixed thing that produces a few variations or updates from time to time. It is a fluid thing, dynamic, alive, highly configurable, and highly changeable over time.

It’s here that he offers the main theme of the book: “…technology evolves by combining existing technologies to yield further technologies and by using existing technologies to harness effects that become technologies.”

W. Brian Arthur goes on to argue that a key method by which this happens is standard engineering. Standard engineering is problem-solving, and problem-solving is about combining technologies.

There’s a beautiful passage about engineering and creativity:

[Engineering] is a form of composition, of expression, and as such it is open to all the creativity we associate with [architecture, fashion, or music] … [But] the reason engineering is held in less esteem than other creative fields is that unlike music or architecture, the public has not been trained to appreciate a particularly well-executed piece of technology. The computer scientist C. A. R. (Tony) Hoare created the Quicksort algorithm in 1960, a creation of real beauty, but there is no Carnegie Hall for the performance of algorithms to applaud his composition.

Creativity is key to inventing novel technologies…

Novel technologies are truly new technologies—inventions

He defines a novel technology as: “one that uses a principle new or different to the purpose in hand.”

It’s worth calling out here that this sounds a lot like Clayton Christensen’s definition of a disruptive technology

W. Brian Arthur dives deep into how these new principles are discovered. Ultimately, it’s about creativity:

At the creative heart of invention lies appropriation, some sort of mental borrowing that comes in the form of a half-conscious suggestion. …

At [the heart of invention] lies the act of seeing a suitable solution in action—seeing a suitable principle that will do the job, [which] in most cases arrives [not] by conscious deliberation; it arises through a process of association—mental association.

When a novel technology emerges, it is crude. It works, but barely; then it begins to improve and develop.

Once the novel technology emerges, standard engineering kicks in, and the process of problem-solving and recombination takes over

As technologies improve, as they get stretched to solve more and more difficult problems, they become more complex—more subassemblies, more parts, etc.

But this has a cost:

Over time it becomes encrusted with systems and subassemblies hung onto it to make it work properly, handle exceptions, extend its range of application, and provide redundancy in the event of failure.

Note, again, how all this sounds very similar to Clayton Christensen’s theories: an existing solution “overshoots” the market; a cheaper, simpler, more convenient disruptive innovation with an entirely new approach emerges; it is initially inferior to the existing high-end solutions, so it targets the low end of the market; but then it improves much faster, ultimately overtaking the existing high-end solutions.

Revolutions in the economy happen through domains of technology

Now, perhaps this is obvious, but technologies organize themselves into domains of similar technologies—and W. Brian Arthur admits as much

But then he points out that domains of technology have a different and important character from individual technologies, particularly in how they impact the economy:

[Domains] are not invented; they emerge, crystallizing around a set of phenomena or a novel enabling technology, and building organically from these. They develop not on a time scale measured in years, but on one measured in decades—the digital domain emerged in the 1940s and is still building out. And they are developed not by a single practitioner or a small group of these, but by a wide number of interested parties.

Domains also affect the economy more deeply than do individual technologies. The economy does not react to the coming of the railway locomotive in 1829 or its improvements in the 1830s—not much at any rate. But it does react and change significantly when the domain of technologies that comprise the railways comes along. In fact, one thing I will argue is that the economy does not adopt a new body of technology; it encounters it. The economy reacts to the new body’s presence, and in doing so changes its activities, its industries, its organizational arrangements—its structures. And if the resulting change in the economy is important enough, we call that a revolution.

Domains have a life cycle, both in the technology itself and in the interest and investment around it (as articulated by Carlota Perez).

Some domains, however, avoid the life cycle of youth, adulthood, and old age because they morph. One of their key technologies changes (from vacuum tubes to transistors to integrated circuits), or their main application changes (computation moved from wartime scientific calculation to accounting to personal computing, and so on), or they throw off new subdomains (e.g., computing and telecommunications birthed the internet, and it now looks like the internet and cryptography are birthing a new subdomain: crypto networks).

W. Brian Arthur then goes into detail about how technology domains and industries interact:

How does this happen? Think of the new body of technology as its methods, devices, understandings, and practices. And think of a particular industry as comprised of its organizations and business processes, its production methods and physical equipment. All these are technologies in the wide sense I talked of earlier. These two collections of individual technologies—one from the new domain, and the other from the particular industry—come together and encounter each other. And new combinations form as a result.

This is fascinating to consider and explains a lot. Barnes & Noble didn’t win by adopting the internet; Amazon emerged. Taxis didn’t transform themselves with mobile; Uber emerged. Industries tend not to adopt technologies; they’re transformed by them.

W. Brian Arthur defines redomaining to mean that…

…industries adapt themselves to a new body of technology, but they do not do this merely by adopting it. They draw from the new body, select what they want, and combine some of their parts with some of the new domain’s, sometimes creating subindustries as a result. As this happens the domain of course adapts too. It adds new functionalities that better fit it to the industries that use it.

Technology evolves. All of the above sets the stage for W. Brian Arthur’s central argument.

Example: In the early 1900s, a few engineers succeeded in adding a third electrode to the diode vacuum tube, making it easier to transmit and receive radio signals and thus helping birth radio broadcasting. Incidentally, the same technology in a different configuration could serve as a logic circuit, helping birth modern computation.

W. Brian Arthur makes a few central statements:

“Any solution to a human need—any novel means to a purpose—can only be made manifest in the physical world using methods and components that already exist in that world.”

“All technologies are birthed from existing technologies in the sense that these in combination directly made them possible.”

“…novel elements are directly made possible by existing ones. But more loosely we can say they arise from a set of existing technologies, from a combination of existing technologies. It is in this sense that novel elements in the collective of technology are brought into being—made possible—from existing ones, and that technology creates itself out of itself.”

In other words, he says, technology is autopoietic—“self-creating”—meaning…

“…every technology stands upon a pyramid of others that made it possible in a succession that goes back to the earliest phenomena that humans captured…[and] all future technologies will derive from those that now exist (perhaps in no obvious way) because these are the elements that will form further elements that will eventually make these future technologies possible.”

And from here it gets grand, truly mind-blowing:

Autopoiesis gives us a sense of technology expanding into the future. It also gives us a way to think of technology in human history. Usually that history is presented as a set of discrete inventions that happened at different times, with some cross influences from one technology to another. What would this history look like if we were to recount it Genesis-style from this self-creating point of view? Here is a thumbnail version.

In the beginning, the first phenomena to be harnessed were available directly in nature. Certain materials flake when chipped: whence bladed tools from flint or obsidian. Heavy objects crush materials when pounded against hard surfaces: whence the grinding of herbs and seeds. Flexible materials when bent store energy: whence bows from deer’s antler or saplings. These phenomena, lying on the floor of nature as it were, made possible primitive tools and techniques. These in turn made possible yet others. Fire made possible cooking, the hollowing out of logs for primitive canoes, the firing of pottery. And it opened up other phenomena—that certain ores yield formable metals under high heat: whence weapons, chisels, hoes, and nails. Combinations of elements began to occur: thongs or cords of braided fibers were used to haft metal to wood for axes. Clusters of technology and crafts of practice—dyeing, potting, weaving, mining, metal smithing, boat-building—began to emerge. Wind and water energy were harnessed for power. Combinations of levers, pulleys, cranks, ropes, and toothed gears appeared—early machines—and were used for milling grains, irrigation, construction, and timekeeping. Crafts of practice grew around these technologies; some benefited from experimentation and yielded crude understandings of phenomena and their uses.

In time, these understandings gave way to close observation of phenomena, and the use of these became systematized—here the modern era begins—as the method of science. The chemical, optical, thermodynamic, and electrical phenomena began to be understood and captured using instruments—the thermometer, calorimeter, torsion balance—constructed for precise observation. The large domains of technology came on line: heat engines, industrial chemistry, electricity, electronics. And with these still finer phenomena were captured: X-radiation, radio-wave transmission, coherent light. And with laser optics, radio transmission, and logic circuit elements in a vast array of different combinations, modern telecommunications

In this way, the few became many, and the many became specialized, and the specialized uncovered still further phenomena and made possible the finer and finer use of nature’s principles. So that now, with the coming of nanotechnology, captured phenomena can direct captured phenomena to move and place single atoms in materials for further specific uses. All this has issued from the use of natural earthly phenomena. Had we lived in a universe with different phenomena we would have had different technologies. In this way, over a time long-drawn-out by human measures but short by evolutionary ones, the collective that is technology has built out, deepened, specialized, and complicated itself.

☝️ STOP — read that again and savor it. It’s a masterpiece of writing, compressing all of technology and human history into four paragraphs and the articulation of an incredible idea: a Genesis-style reconstruction of technology evolution!

There are two forces that drive technology evolution

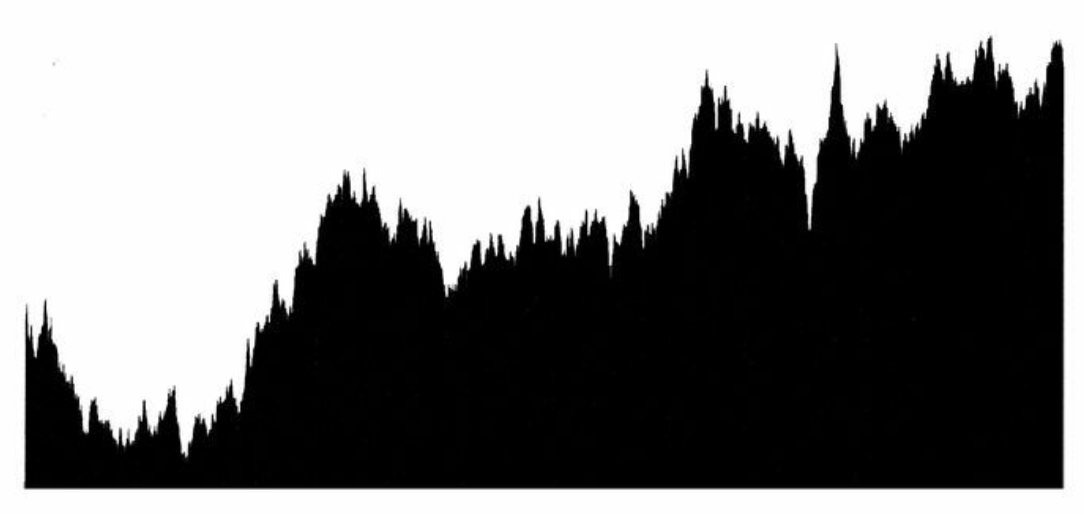

One force is combination: the ability of the existing collective of technology to “supply” new technologies, whether by putting together existing parts and assemblies or by using them to capture phenomena.

The other force is the “demand” for means to fulfill purposes, the need for novel technologies.

“Existing technologies used in combination provide the possibilities of novel technologies: the potential supply of them. And human and technical needs create opportunity niches: the demand for them. As new technologies are brought in, new opportunities appear for further harnessings and further combinings. The whole bootstraps its way upward.”

W. Brian Arthur describes an experiment that he and his colleague Wolfgang Polak conducted, simulating this evolution on a computer with simple logic circuits. I won’t go into the details here because they’re complex, but the implication is pretty amazing:

“We found that after sufficient time, the system evolved quite complicated circuits.”…

“In several of the runs the system evolved an 8-bit adder, the basis of a simple calculator. This may seem not particularly remarkable, but actually it is striking.”…

“…the chances of such a circuit being discovered by random combination in 250,000 steps is negligible. If you did not know the process by which this evolution worked, and opened up the computer at the end of the experiment to find it had evolved a correctly functioning 8-bit adder against such extremely long odds, you would be rightly surprised that anything so complicated had appeared. You might have to assume an intelligent designer within the machine.”

Meaning: evolution is powerful

W. Brian Arthur clarifies that, while this is evolution, it is not the same mechanism as Darwinian evolution, which is based on mutation and selection; this is combinatorial evolution
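To make the two forces concrete, here is a toy sketch of combinatorial evolution in the spirit of the Arthur and Polak experiment. It is a simplified stand-in of my own, not their setup, which used random combinations, more inputs, and goals up to adders. This version has the following assumptions:

* Circuits are two-input truth tables.
* NAND of two existing circuits is the only way to combine them.
* The six-item goal list is invented for illustration.
* Every pair is tried deterministically in each generation.

Combination supplies candidates, and the goals (needs) decide which candidates are kept as new building blocks.

```python
from itertools import combinations_with_replacement

# Truth tables over inputs (a, b), rows ordered (0,0), (0,1), (1,0), (1,1).
A = (0, 0, 1, 1)
B = (0, 1, 0, 1)


def nand(x, y):
    """The single combining operation: NAND applied row by row to two existing outputs."""
    return tuple(1 - (p & q) for p, q in zip(x, y))


# Hypothetical list of needs; a combination is kept only if it meets one of them.
GOALS = {
    "NAND": (1, 1, 1, 0),
    "NOT_A": (1, 1, 0, 0),
    "NOT_B": (1, 0, 1, 0),
    "AND": (0, 0, 0, 1),
    "OR": (0, 1, 1, 1),
    "NOR": (1, 0, 0, 0),
}


def evolve(max_generations=10):
    """Grow the library generation by generation; return it plus each goal's birth generation."""
    library = {"A": A, "B": B}  # the initial collective
    born = {}  # goal name -> generation in which it first appeared
    for gen in range(1, max_generations + 1):
        snapshot = list(library.values())  # only existing elements can be combined
        new = {}
        for x, y in combinations_with_replacement(snapshot, 2):
            z = nand(x, y)
            for name, table in GOALS.items():
                if z == table and name not in library and name not in new:
                    new[name] = z  # an unmet need is satisfied: keep it as a new element
        if not new:
            break  # nothing further is reachable from the current collective
        library.update(new)
        for name in new:
            born[name] = gen
    return library, born


if __name__ == "__main__":
    library, born = evolve()
    for name, gen in born.items():
        print(gen, name)
```

In this toy run, AND cannot appear until NAND exists to serve as its stepping stone. NOR likewise waits for OR. This is a small-scale version of elements becoming possible only once the elements that make them possible are in place.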

The economy is technology, and it evolves with technology.

W. Brian Arthur makes a subtle and powerful point that I had to read several times before I grasped it, and particularly its significance.

Traditionally, the economy is defined as a “system of production and distribution and consumption” of goods and services, as if the economy were a giant container for technology.

W. Brian Arthur defines it differently: the economy is the set of arrangements and activities by which a society satisfies its needs; meaning…

The set of arrangements that form the economy include all the myriad devices and methods and all the purposed systems we call technologies. They include hospitals and surgical procedures. And markets and pricing systems. And trading arrangements, distribution systems, organizations, and businesses. And financial systems, banks, regulatory systems, and legal systems. All these are arrangements by which we fulfill our needs, all are means to fulfill human purposes. All are therefore by my earlier criterion “technologies,” or purposed systems. I talked about this in Chapter 3, so the idea should not be too unfamiliar. It means that the New York Stock Exchange and the specialized provisions of contract law are every bit as much means to human purposes as are steel mills and textile machinery. They too are in a wide sense technologies.

If we include all these “arrangements” in the collective of technology, we begin to see the economy not as container for its technologies, but as something constructed from its technologies. The economy is a set of activities and behaviors and flows of goods and services mediated by—draped over—its technologies. It follows that the methods, processes, and organizational forms I have been talking about form the economy.

The economy is an expression of its technologies.

…

The shift in thinking I am putting forward here is not large; it is subtle. It is like seeing the mind not as a container for its concepts and habitual thought processes but as something that emerges from these. Or seeing an ecology not as containing a collection of biological species, but as forming from its collection of species. So it is with the economy.

The implication:

Because the economy is an expression of its technologies, it is a set of arrangements that forms from the processes, organizations, devices, and institutional provisions that comprise the evolving collective; and it evolves as its technologies do. And because economy arises out of its technologies, it inherits from them self-creation, perpetual openness, and perpetual novelty. The economy therefore arises ultimately out of the phenomena that create technology; it is nature organized to serve our needs.

W. Brian Arthur paints a picture of a very complex thing, this economy, and the meta point is that it is very difficult to understand how it works:

The economy is not a simple system; it is an evolving, complex one, and the structures it forms change constantly over time. This means our interpretations of the economy must change constantly over time. I sometimes think of the economy as a World War I battlefield at night. It is dark, and not much can be seen over the parapets. From a half mile or so away, across in enemy territory, rumblings are heard and a sense develops that emplacements are shifting and troops are being redeployed. But the best guesses of the new configuration are extrapolations of the old. Then someone puts up a flare and it illuminates a whole pattern of emplacements and disposals and troops and trenches in the observers’ minds, and all goes dark again. So it is with the economy. The great flares in economics are those of theorists like Smith or Ricardo or Marx or Keynes. Or indeed Schumpeter himself. They light for a time, but the rumblings and redeployments continue in the dark. We can indeed observe the economy, but our language for it, our labels for it, and our understanding of it are all frozen by the great flares that have lit up the scene, and in particular by the last great set of flares.

Technology is becoming biological and vice versa

Technology is becoming biological: “…technologies are acquiring properties we associate with living organisms. As they sense and react to their environment, as they become self-assembling, self-configuring, self-healing, and “cognitive,” they more and more resemble living organisms. The more sophisticated and “high-tech” technologies become, the more they become biological.”

And biology is becoming technology: “As biology is better understood, we are steadily seeing it as more mechanistic.” … “…organisms and organelles [are] highly elaborate technologies. In fact, living things give us a glimpse of how far technology has yet to go. No engineering technology is remotely as complicated in its workings as the cell.”

Which means, per the above logic, that the economy is becoming biological—generative.

Our economy is not a system at equilibrium but rather an evolving, complex system

All of this means the economy is much more vibrant than we think, a thing of “messy vitality”

Economics itself is beginning to respond to these changes and reflect that the object it studies is not a system at equilibrium, but an evolving, complex system whose elements—consumers, investors, firms, governing authorities—react to the patterns these elements create.

…

Not only is our understanding of the economy changing to reflect a more open, organic view. Our interpretation of the world is also becoming more open and organic; and again technology has a part in this shift. In the time of Descartes we began to interpret the world in terms of the perceived qualities of technology: its mechanical linkages, its formal order, its motive power, its simple geometry, its clean surfaces, its beautiful clockwork exactness. These qualities have projected themselves on culture and thought as ideals to be used for explanation and emulation—a tendency that was greatly boosted by the discoveries of simple order and clockwork exactness in Galilean and Newtonian science. These gave us a view of the world as consisting of parts, as rational, as governed by Reason (capitalized in the eighteenth century, and feminine) and by simplicity. They engendered, to borrow a phrase from architect Robert Venturi, prim dreams of pure order.

And so the three centuries since Newton became a long period of fascination with technique, with machines, and with dreams of the pure order of things. The twentieth century saw the high expression of this as this mechanistic view began to dominate. In many academic areas—psychology and economics, for example—the mechanistic interpretation subjugated insightful thought to the fascination of technique. In philosophy, it brought hopes that rational philosophy could be founded on—constructed from—the elements of logic and later of language. In politics, it brought ideals of controlled, engineered societies; and with these the managed, controlled structures of socialism, communism, and various forms of fascism. In architecture, it brought the austere geometry and clean surfaces of Le Corbusier and the Bauhaus. But in time all these domains sprawled beyond any system built to contain them, and all through the twentieth century movements based on the mechanistic dreams of pure order broke down.

In its place is growing an appreciation that the world reflects more than the sum of its mechanisms. Mechanism, to be sure, is still central. But we are now aware that as mechanisms become interconnected and complicated, the worlds they reveal are complex. They are open, evolving, and yield emergent properties that are not predictable from their parts. The view we are moving to is no longer one of pure order. It is one of wholeness, an organic wholeness, and imperfection.

We fear technology that destroys our nature; we want technology that extends our nature

There are two seemingly contradictory views: “…that technology is a thing directing our lives, and simultaneously a thing blessedly serving our lives…”

There’s a tension: if, as W. Brian Arthur proposes, all technology emerges from phenomena in nature, then technology is based in nature. “We trust nature—we feel at home in it—but we hope in technology”:

There is an irony here. Technology, as I have said, is the programming of nature, the orchestration and use of nature’s phenomena. So in its deepest essence it is natural, profoundly natural. But it does not feel natural.

…

…we react to this deep unease in many ways. We turn to tradition. We turn to environmentalism. We hearken to family values. We turn to fundamentalism. We protest. Behind these reactions, justified or not, lie fears. We fear that technology separates us from nature, destroys nature, destroys our nature.

…

[But] to have no technology is to be not-human; technology is a very large part of what makes us human.

Robert Pirsig said:

The Buddha, the Godhead, resides quite as comfortably in the circuits of a digital computer or the gears of a cycle transmission as he does at the top of a mountain or in the petals of a flower.

“…our unconscious makes a distinction between technology as enslaving our nature versus technology as extending our nature. This is the correct distinction.”